Since its inception, OpenAI has consistently used the same naming system for ChatGPT and its various versions.

After releasing its latest model, GPT-4o Mini, OpenAI CEO Sam Altman admitted that ChatGPT needs a new naming scheme.

On July 18, OpenAI announced a new model, calling it “our most cost-efficient small model.” The CEO shared details on his X account (formerly Twitter), stating, “15 cents per million input tokens, 60 cents per million output tokens, 82% MMLU, and fast. Most importantly, we think people will really, really like using the new model.”

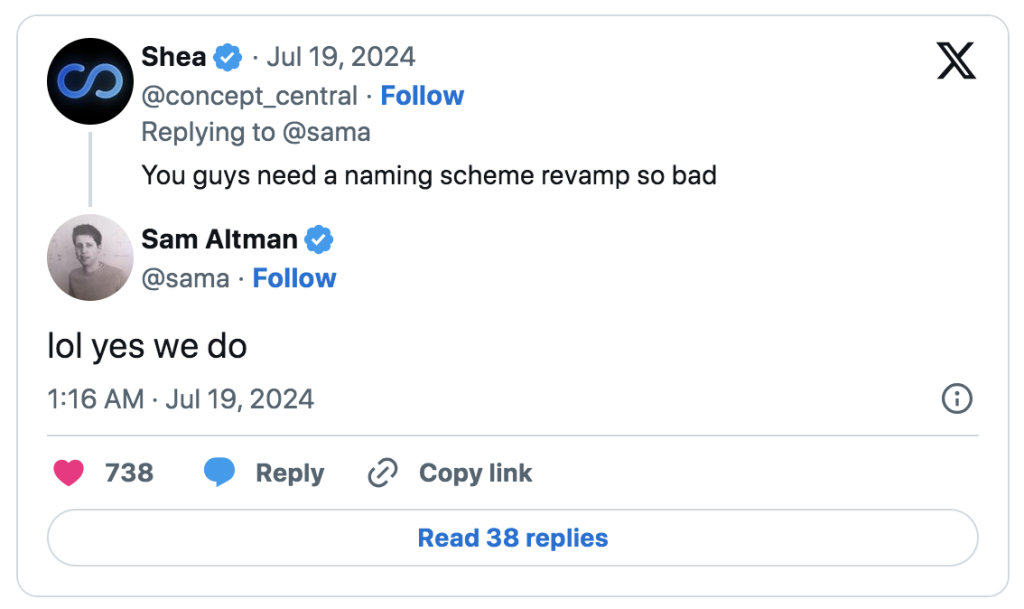
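To put the quoted prices in perspective, here is a minimal sketch of the arithmetic for a single request. The two rates come from Altman's post; the function name `estimate_cost_usd` and the example token counts are illustrative assumptions, not part of any OpenAI library.

```python
# Prices quoted in the announcement, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.15   # "15 cents per million input tokens"
OUTPUT_USD_PER_MILLION = 0.60  # "60 cents per million output tokens"


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in US dollars (hypothetical helper)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


if __name__ == "__main__":
    # Example token counts are made up for illustration.
    print(f"${estimate_cost_usd(10_000, 2_000):.4f}")
```

At these rates, a request with 10,000 input tokens and 2,000 output tokens would cost roughly a quarter of a cent.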

While many users appreciated the product, one user joked that the ChatGPT model names, which have grown longer as OpenAI has progressed, needed to be updated. Shea replied to Mr. Altman’s post, saying, “You guys need a naming scheme revamp so bad.” The entrepreneur acknowledged the comment and responded, “lol yes we do.”

GPT-4o Mini, one of OpenAI’s most efficient AI models, is compact and offers low latency (response time). The API currently supports text and vision (image processing), and OpenAI states that support for text, image, video, and audio will follow. The model has a context window of 128K tokens, supports up to 16K output tokens per request, and has a knowledge cutoff of October 2023. The improved tokenizer it shares with GPT-4o makes handling non-English text more cost-effective.
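The token limits above can be read as a simple budget check. The sketch below rests on two assumptions not stated in the article: that "128K" and "16K" mean 128,000 and 16,000 tokens, and that the prompt and the generated output share the same context window. The helper `fits_limits` is hypothetical and is not an OpenAI API.

```python
# Limits as reported in the article. Reading "K" as 1,000 is an assumption;
# the exact figures may differ.
CONTEXT_WINDOW_TOKENS = 128_000
MAX_OUTPUT_TOKENS = 16_000


def fits_limits(prompt_tokens: int, requested_output_tokens: int) -> bool:
    """Check whether a request stays within both limits (hypothetical helper).

    Assumes prompt and output tokens count against one shared context window.
    """
    if prompt_tokens < 0 or requested_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    within_output_cap = requested_output_tokens <= MAX_OUTPUT_TOKENS
    within_context = (prompt_tokens + requested_output_tokens
                      <= CONTEXT_WINDOW_TOKENS)
    return within_output_cap and within_context


if __name__ == "__main__":
    print(fits_limits(100_000, 16_000))  # prompt plus output is 116,000 tokens
    print(fits_limits(120_000, 16_000))  # prompt plus output is 136,000 tokens
```

Under these assumptions, the first request fits and the second does not, because the combined total exceeds the context window even though the output cap is respected.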

In line with its Preparedness Framework, OpenAI used both automated and human evaluations to assess the model’s safety. The company also had 70 external experts from various fields test the model to identify potential risks.