Speculation is swirling as users notice enhanced capabilities and responsiveness.

It looks like ChatGPT is running on turbo.

Since its launch in November 2022, ChatGPT has been the leading AI platform, with new models and regular UI updates. However, has OpenAI also made behind-the-scenes improvements to GPT-4 that have enhanced its responsiveness?

I’ve been working extensively with Anthropic’s Claude lately. It’s no secret that I’m a big fan of the model’s Artifacts feature and its responses. Claude typically provides more detailed outputs, grasps what I need from a single prompt, and is faster, thanks to Claude 3.5 Sonnet.

I frequently switch between platforms like Llama on Groq, Google Gemini, and the various models available on Poe. Lately, I’ve noticed that ChatGPT has become as effective as Claude was when 3.5 Sonnet launched, especially for longer tasks.

Just in the past week, I’ve used ChatGPT to build an entire iOS app in an hour, rewrite multiple letters, and create shot-by-shot plans for AI video projects—all with ease. It handles every task smoothly, responds quickly, and is more creative than ever.

What might have changed?

While new models like GPT-4o from OpenAI grab the spotlight, the company often releases fine-tuned versions of existing models that significantly boost performance. These updates usually go unnoticed outside developer circles, as the labeling in ChatGPT remains unchanged.

Last week, OpenAI upgraded GPT-4o, releasing a new snapshot for developers called gpt-4o-2024-08-06. The main benefits are cheaper API calls and faster responses, but each update also brings overall improvements through fine-tuning.

These updates were likely applied to ChatGPT as well, as it makes sense for OpenAI to use the most cost-efficient version of GPT-4o in its public chatbot.

While they lack the fanfare of a new GPT-4o launch, these updates have resulted in subtle improvements. Beyond the model updates, I also suspect some back-end infrastructure changes are contributing to the longer outputs and faster responses.

How do you know it is better?

My assessment is based entirely on my experience with ChatGPT over the past week. I’ve given it the same types of queries I’ve previously put to both Claude and ChatGPT, and it seems faster.
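None of this is a benchmark. For anyone who wants to put rough numbers on "seems faster", here is a minimal Python sketch of how the same prompts could be timed over repeated runs. The names `time_responses` and `fake_model` are made up for illustration, and `fake_model` is only a stand-in that sleeps briefly; you would swap in whatever function sends a prompt to the chatbot or API you are testing. It measures wall-clock time and reply length only, not quality.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_responses(
    ask: Callable[[str], str], prompts: List[str], runs: int = 3
) -> Dict[str, float]:
    """Call `ask` on each prompt `runs` times and summarise the results."""
    latencies: List[float] = []
    lengths: List[int] = []
    for prompt in prompts:
        for _ in range(runs):
            start = time.perf_counter()
            reply = ask(prompt)
            latencies.append(time.perf_counter() - start)
            lengths.append(len(reply))
    return {
        "calls": len(latencies),
        "median_seconds": statistics.median(latencies),
        "mean_chars": statistics.mean(lengths),
    }


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real chatbot call: waits briefly,
    # then returns the prompt in upper case.
    time.sleep(0.01)
    return prompt.upper()


if __name__ == "__main__":
    summary = time_responses(
        fake_model,
        ["come up with 5 ideas for a short film about ordinary people"],
    )
    print(summary)
```

Running the same prompt set a week or two apart and comparing the median time and average reply length would give a slightly firmer footing than impressions alone, although network conditions and server load will still add noise.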

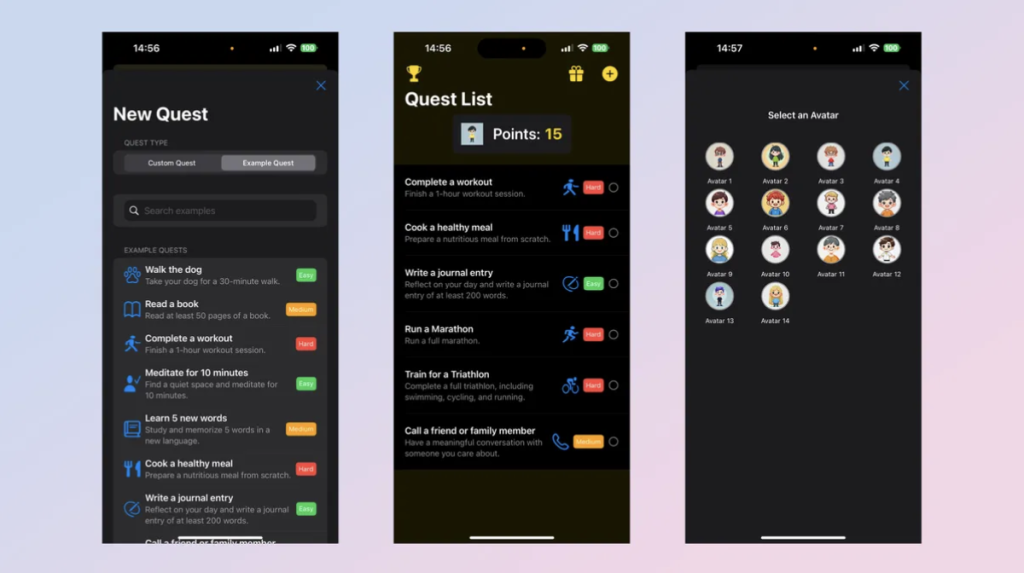

Very long code blocks are a good example. I built an iPhone to-do list app that uses gamification to encourage task completion. This often required multiple messages for each block of code, and in the past, if the response was too long, ChatGPT would truncate it.

Previously, ChatGPT would expect you to extract elements and integrate them into your own code. Recently, however, it has been providing the entire code block for every update request without being asked.

ChatGPT still generates long code across multiple messages, but the ‘continue generating’ feature joins them so the code appears as a single, continuous block. Splitting code across messages used to disrupt its layout or structure.

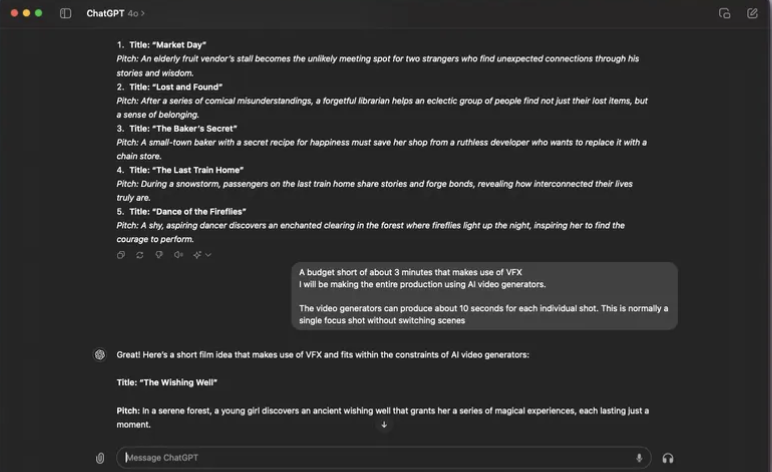

I’ve also noticed that ChatGPT is more creative in responding to tasks like “come up with 5 ideas for a short film about ordinary people” or “rewrite this letter for a specific audience.”

Although I can’t confirm that ChatGPT has received an upgrade, its performance does seem to have improved over the past two weeks, and I’ve been using it more frequently than I have in a while.