OpenAI o1 stands out for its remarkable ability to “think,” but it may not be suitable for everyone—at least not right now.

OpenAI introduced its latest AI model, o1, last week, following much speculation about post-GPT-4 models with names like “Strawberry,” “Orion,” and “Q*,” as well as the anticipated “GPT-5.” This new model aims to advance AI by improving reasoning skills and solving complex scientific problems.

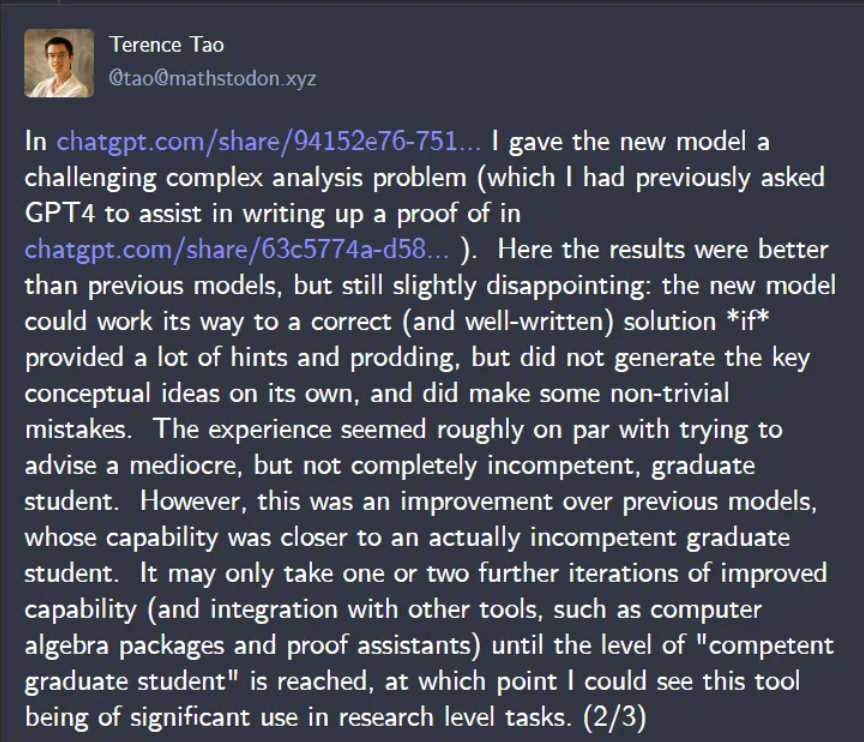

Developers, cybersecurity professionals, and AI enthusiasts are actively discussing the potential impact of o1. While many view it as a major advancement in AI, some with deeper knowledge of the model urge caution. OpenAI’s Joanne Jang emphasized early on that o1 “isn’t a miracle model.”

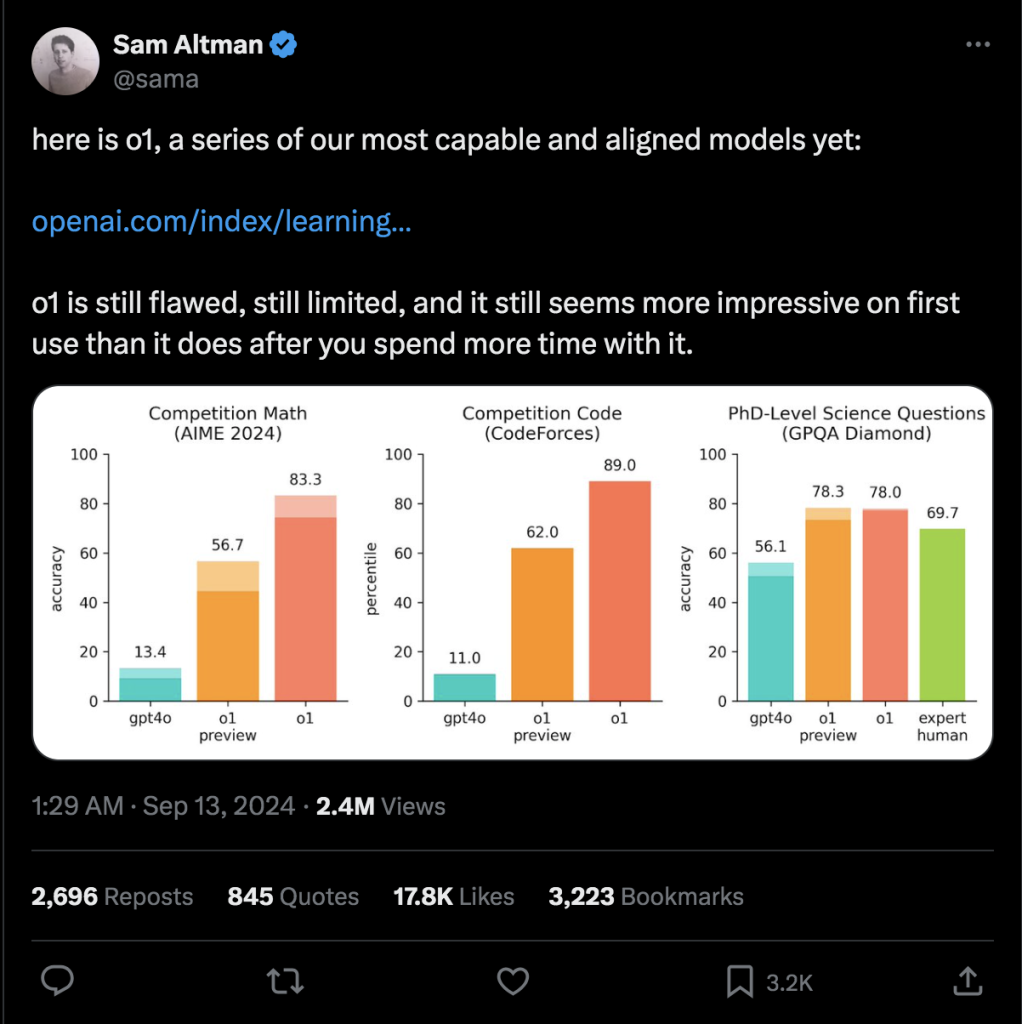

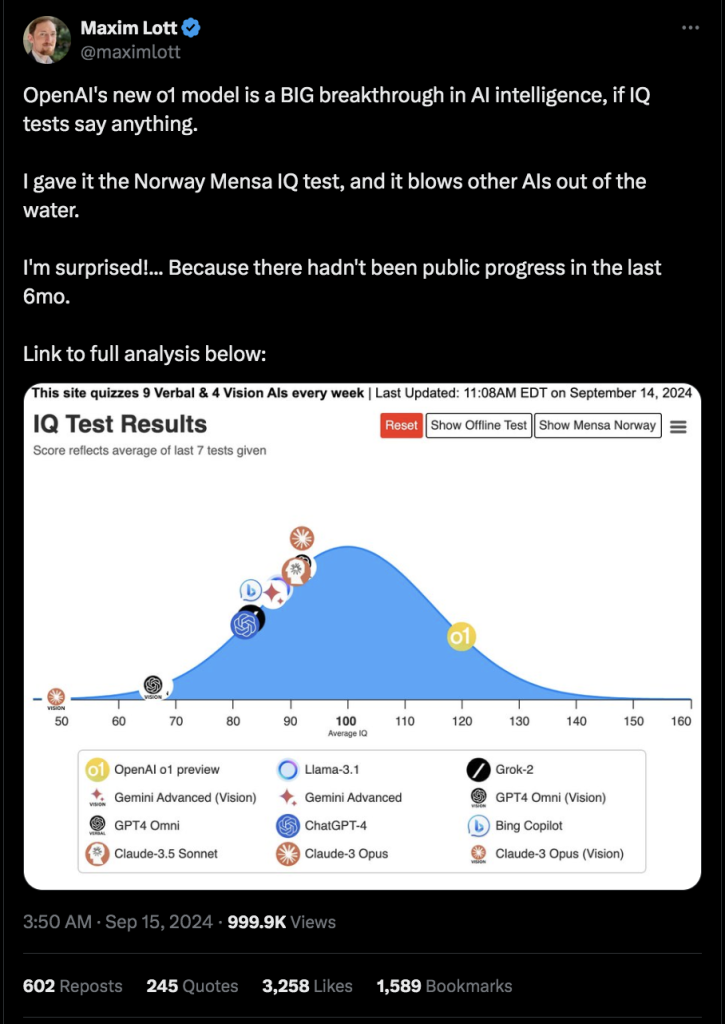

o1 represents a focused attempt to address challenges that have long puzzled AI systems, particularly those involving vague or inaccurate prompts. It aims to surpass previous models—and sometimes even human experts—in handling complex coding tasks and intricate mathematical problems, especially where advanced reasoning is required.

However, OpenAI o1 isn’t without its flaws. It faces performance limitations, struggles with versatility, and raises ethical concerns, which might make you reconsider selecting it as your go-to model from the wide range of available LLMs.

We’ve experimented with o1-preview and o1-mini, though only briefly because of the weekly limits of 30 or 50 messages, depending on the model. From this, we’ve compiled a list of what we like, dislike, and find concerning about the model.

The Good

Reasoned thinking

o1’s standout feature is its exceptional problem-solving ability. According to OpenAI, it often outperforms PhD-level human experts on specific problems, particularly in fields like biology and physics that demand high precision. This makes it an invaluable asset for researchers tackling complex scientific challenges and analyzing vast datasets.

Spontaneous Chain of Reasoning

One of the most fascinating aspects of o1 is its “Chain of Thought” processing method. This technique enables the AI to decompose complex tasks into smaller, more manageable steps, evaluating the potential outcomes of each step before arriving at the optimal solution.

It’s like watching a chess grandmaster analyze a game move by move, working through a detailed line of reasoning before committing to a decision.

In summary: o1’s logical and reasoning abilities are exceptional.

Programming and evaluation

OpenAI o1 excels in programming tasks. It proves versatile across various professional contexts, from educational purposes and real-time code debugging to scientific research.

OpenAI focused significantly on enhancing o1’s coding capabilities, making it more powerful and adaptable than previous models. It now better understands user intentions before translating tasks into code.

While other models are proficient at coding, applying Chain of Thought during a coding session boosts productivity and enables the model to handle more complex tasks.

Security against unauthorized access

OpenAI has not ignored the ethical aspects of AI development. The company has integrated built-in filtering systems into o1 to prevent harmful outputs. While some might view these safeguards as restrictive or unnecessary, large businesses—facing the repercussions and responsibilities of AI actions—may appreciate a safer model that avoids recommending dangerous actions, producing illegal content, or being manipulated into making decisions that could lead to financial losses.

OpenAI asserts that the model is built to resist “jailbreaking,” or attempts to circumvent its ethical safeguards—a feature likely to appeal to security-focused users. However, past beta versions fell short in this regard, though the official release candidate might perform better.

The Bad

It’s slooooow

o1 is noticeably slower than GPT-4o. The fact that OpenAI built “mini” versions specifically for speed underscores how unresponsive the full model is, which makes it a poor fit for tasks requiring quick interactions or high-pressure settings.

Naturally, more powerful models require more computing time. However, part of this delay is due to the Chain of Thought reasoning process that o1 uses before delivering a response. The model “thinks” for about 10 seconds before beginning to generate its answers, and in some cases, tasks took over a minute of “thinking time” before the model provided a response in our tests.

Additionally, factor in extra time for the model to compose an extensive chain of thought before delivering even the simplest answer. This could be challenging if you don’t have a lot of patience.

It’s not multimodal—at least not yet.

o1 also offers a relatively basic set of features compared to models like GPT-4. It lacks several functionalities that developers rely on, such as memory functions, file upload capabilities, data analysis tools, and web browsing. For tech professionals used to a comprehensive AI toolkit, working with o1 might feel like a step down from a Swiss Army knife to a simple blade.

Additionally, those using ChatGPT for creative tasks won’t fully benefit from this model. It doesn’t support DALL-E and remains text-only, so it lacks the versatility that comes from combining text with image generation and won’t meet the needs of those seeking advanced multimedia capabilities.

OpenAI has pledged to add those features in the future, but for now, a text-only chatbot may not be ideal for most users.

It’s lacking in creativity.

Yes, o1 excels in reasoning, coding, and complex logical tasks, but it struggles with creative tasks like writing a novel, enhancing literary texts, or proofreading creative stories.

OpenAI recognizes this limitation. Even within ChatGPT’s main interface, it notes that while GPT-4 is better for complex tasks, o1 is designed for advanced reasoning. As a result, o1, despite its innovations, falls short in more generalist applications compared to its predecessor, making it feel like a less significant advancement than anticipated.

The Ugly

It’s expensive by design.

Resource consumption is a major drawback of o1. Its advanced reasoning capabilities come with significant financial and environmental costs. The model’s high demand for processing power increases operational expenses and energy usage, potentially making it unaffordable for smaller developers and organizations. This limits o1’s accessibility to those with substantial budgets and strong infrastructure.

Additionally, because the model runs its Chain of Thought at inference time, the tokens it generates before arriving at a useful answer are not free: you’re charged for them.

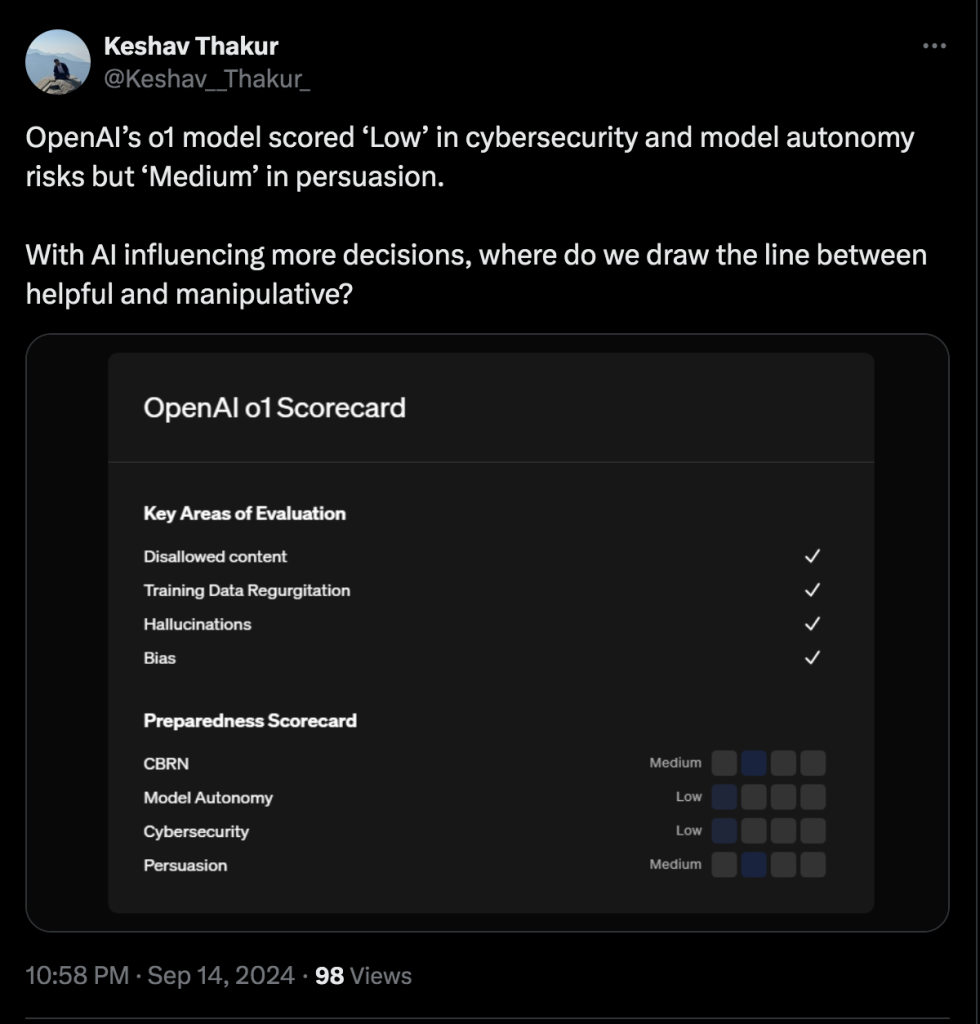

This design drives up costs. For example, we asked o1: “A test subject is sitting on a spacecraft traveling at the speed of light minus 1 m/s. During the journey, an object impacts the spacecraft, increasing its speed by 1.1 m/s. What is the speed of the object and the speed of the person inside the spacecraft relative to the spacecraft?”

It generated 1,184 tokens of processing before arriving at a 68-token final answer—which, incidentally, was incorrect.
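To make that arithmetic concrete, here is a minimal sketch of how the bill adds up when hidden reasoning tokens are charged as output. The function names are ours, not part of any OpenAI SDK, and the rate of 60 USD per million output tokens is an assumption used for illustration; check OpenAI’s current pricing before relying on it.

```python
def billed_output_tokens(reasoning_tokens: int, answer_tokens: int) -> int:
    """Reasoning tokens are billed like output tokens, so both counts add up."""
    return reasoning_tokens + answer_tokens


def output_cost_usd(reasoning_tokens: int, answer_tokens: int,
                    usd_per_million_output: float) -> float:
    """Cost of the output side of one request at a given per-million-token rate."""
    total = billed_output_tokens(reasoning_tokens, answer_tokens)
    return total * usd_per_million_output / 1_000_000


def reasoning_share(reasoning_tokens: int, answer_tokens: int) -> float:
    """Fraction of the billed output that the user never sees as an answer."""
    return reasoning_tokens / billed_output_tokens(reasoning_tokens, answer_tokens)


if __name__ == "__main__":
    # Token counts from our spacecraft test; the price is an assumed example rate.
    reasoning, answer = 1_184, 68
    assumed_rate = 60.0  # USD per million output tokens (assumption)
    print(billed_output_tokens(reasoning, answer))
    print(f"{output_cost_usd(reasoning, answer, assumed_rate):.5f}")
    print(f"{reasoning_share(reasoning, answer):.1%}")
```

In our test, roughly 95% of the billed output was reasoning the user would normally skip. A single request like this costs only pennies, but the same ratio applies to every call an application makes.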

Inconsistencies are likely.

Another issue is its inconsistent performance, especially in creative problem-solving tasks. The model often produces a series of incorrect or irrelevant responses before finally reaching the correct answer.

For instance, renowned mathematician Terence Tao commented, “The experience was similar to advising a mediocre, yet not entirely incompetent, graduate student.”

However, because its Chain of Thought is so convincing (and, to be honest, you might skip it to get to the actual answer quickly), subtle errors can be difficult to spot. This is particularly true for those using it for problem-solving rather than merely testing or benchmarking its capabilities.

This unpredictability requires ongoing human supervision, which can undermine some of the efficiency benefits that AI is supposed to offer. It seems that the model doesn’t truly “think” but instead applies patterns from a vast dataset of problem-solving steps, mimicking how similar issues were addressed previously.

So, while AGI is still not here, it’s important to acknowledge that this is the closest we’ve come.

OpenAI is acting like a Big Brother.

This is another significant concern for those who prioritize their privacy. Since the Sam Altman controversy, OpenAI has been shifting towards a more corporate focus, prioritizing profitability over safety.

The entire Superalignment team was disbanded, the company began forming partnerships with the military, and now it is granting the government access to models before their deployment, as AI has rapidly become a matter of national interest.

Recently, there have been reports indicating that OpenAI is closely monitoring user prompts and manually reviewing their interactions with the model.

A notable case involves Pliny—arguably one of the most well-known LLM jailbreakers—who reported being locked out by OpenAI, which prevented his prompts from being processed by o1.

Other users have also reported receiving emails from OpenAI following their interactions with the model.

This is part of OpenAI’s standard procedures to enhance its models and protect its interests. According to an official statement, “When you use our services, we collect personal information contained in your inputs, file uploads, or feedback (‘content’).”

If client prompts or data are found to violate its terms of service, OpenAI can take measures to address the issue, including account suspension or reporting to authorities. Emails are one of the steps involved in this process.

However, actively policing access to the model doesn’t necessarily make it better at detecting and handling harmful prompts, and it limits what the model can be used for. Usability is constrained by OpenAI’s subjective decisions on which interactions are permitted.

Furthermore, having a corporation monitor user interactions and uploaded data raises privacy concerns. This is exacerbated by OpenAI’s reduced focus on security and its increased proximity to government agencies.

That said, we might be overreacting. OpenAI does promise a zero data retention policy for enterprises that request it.

Non-enterprise users can limit OpenAI’s data collection by adjusting two settings. In ChatGPT’s settings, users should open the Data Controls section and turn off the toggle labeled “Improve the model for everyone” to prevent data collection from their private interactions.

Additionally, users can visit the personalization settings and uncheck ChatGPT’s memory feature to stop data collection from conversations. By default, these options are enabled, allowing OpenAI to access user data until users choose to opt out.

Is OpenAI o1 the right choice for you?

OpenAI’s o1 model marks a departure from the company’s previous strategy of developing universally applicable AI. Instead, o1 is designed specifically for professional and technical use cases, with OpenAI clarifying that it is not intended to replace GPT-4 in every situation.

This specialized focus means o1 is not a one-size-fits-all solution. It may not be suitable for those needing quick answers, straightforward factual responses, or creative writing help. Developers using API credits should also be mindful of o1’s resource-intensive nature, which could result in unexpected costs.

Nonetheless, o1 has the potential to revolutionize tasks that involve complex problem-solving and analytical thinking. Its ability to systematically break down intricate problems makes it particularly effective for analyzing complex data sets or tackling challenging engineering problems.

o1 also streamlines workflows that previously required intricate multi-step prompts. For those tackling complex coding challenges, o1 holds considerable promise in debugging, optimization, and code generation, although it is designed to assist rather than replace human programmers.

Currently, OpenAI’s o1 is only a preview, with the official model expected later this year. It is anticipated to be multimodal and impressive across various domains, not just logical reasoning.

OpenAI Japan CEO Tadao Nagasaki mentioned at the KDDI SUMMIT 2024 that the upcoming model, likely named “GPT Next,” is expected to evolve nearly 100 times more than its predecessors based on historical trends. “Unlike traditional software, AI technology grows exponentially. Therefore, we aim to support the creation of a world where AI is integrated as soon as possible,” Nagasaki said.

So, we can look forward to fewer headaches and more “Aha!” moments with the final version of o1—provided it doesn’t take too long to process.