This standoff highlights the intense competition and high stakes in the AI hardware arena.

Nvidia is renowned for its high-performance gaming GPUs and industry-leading AI GPUs. However, the trillion-dollar company is also known for tightly controlling how its GPUs are used outside its own operations. For instance, it imposes strict limits on the graphics card designs of its add-in board (AIB) partners. Unsurprisingly, this level of control extends to AI customers like Microsoft. According to The Information, Microsoft and Nvidia clashed over how Nvidia's new Blackwell B200 GPUs would be installed in Microsoft's server rooms.

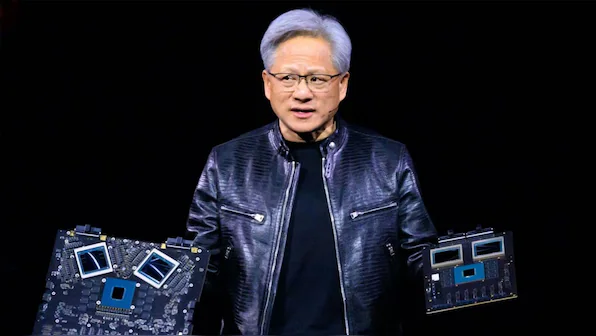

Nvidia has been aggressively expanding its presence in the data center market, as the launch of its Blackwell B200 parts shows. During the launch presentation, CEO Jensen Huang repeatedly emphasized that he no longer thinks in terms of individual GPUs; instead, he views the entire NVL72 rack as a single GPU. This strategy aims to increase revenue from Nvidia's AI products, and part of it involves influencing how customers install their new B200 GPUs.

In the past, customers bought the hardware and then sourced and assembled suitable server racks themselves. Now, Nvidia is encouraging customers to buy individual racks, and even entire SuperPods, directly from Nvidia. The company argues that this improves GPU performance, and the claim has some merit given the complex interconnects between GPUs, servers, racks, and SuperPods. However, it also means customers spend far more money with Nvidia, especially when building data centers at scale.

While Nvidia's smaller customers may be satisfied with this approach, Microsoft was not. According to reports, Nvidia VP Andrew Bell asked Microsoft to purchase a server rack design built specifically for the new B200 GPUs, whose form factor differed slightly from the server racks Microsoft already uses in its data centers.

Microsoft resisted, arguing that the new racks would make it harder to switch between Nvidia's AI GPUs and competing hardware such as AMD's MI300X GPUs. Nvidia eventually backed down and allowed Microsoft to use its own custom server racks for the B200 AI GPUs. However, this is likely not the last conflict we'll see between the two companies.

For its MGX modular server designs, Nvidia supports both OCP's 21-inch Open Rack and standard 19-inch EIA racks. Microsoft appears to use OCP racks, a newer standard that is better suited to dense deployments. Its existing infrastructure likely works well, and replacing all of it would be very costly.

This dispute shows how large and valuable Nvidia has become over the past year. Earlier this week, Nvidia briefly became the world's most valuable company, a title that will likely change hands many times in the coming months and years. Beyond server racks, Nvidia also controls how its GPU inventory is allocated to sustain demand, and it leverages its dominant AI position to promote its own software and networking products, reinforcing its market leadership.

Nvidia is reaping huge benefits from the AI boom, which took off when ChatGPT became popular about a year and a half ago. Since then, Nvidia's AI-focused GPUs have become its most in-demand and highest-revenue products, driving tremendous financial success. Its stock price has skyrocketed and is currently more than eight times higher than at the start of 2023 and more than 2.5 times higher than at the start of 2024.

Nvidia is putting this increased income to work. Its latest AI GPU, the Blackwell B200, is set to be the world's fastest GPU for AI workloads. A single GB200 Superchip offers up to 20 petaflops of compute performance (for sparse FP8), making it theoretically five times faster than its H200 predecessor. The GB200 combines two B200 GPUs and a Grace CPU on a large daughterboard, and each B200 itself consists of two large dies linked together. The 'regular' B200 GPU provides up to 9 petaflops of FP4 (with sparsity), which is still substantial computing capability. It also delivers up to 2.25 petaflops of dense FP16/BF16 compute, which is crucial for AI training workloads.

As of June 20th, 2024, Nvidia's stock has dipped slightly from its high of $140.76, closing at $126.57. It remains to be seen how the stock will perform once the Blackwell B200 begins shipping in volume. Meanwhile, Nvidia remains fully committed to advancing its AI efforts.